Slim down by using a for/in loop#31

Slim down by using a for/in loop#31kddnewton wants to merge 1 commit intodevelopit:masterfrom kddnewton:slim

Conversation

|

Really well done! |

|

So fun to do this 😆I had the same thing inside I'm definitely all for cheeky golfing, but I think should maybe do a before/after comparison of this change. |

|

From: I was going to shave a bit more using template literals Whatever, I think there should be a |

|

The other solution I came up with was: export default function dlv(obj, key, def, p, undef) {

key = key.split ? key.split('.') : key;

for (p = 0; obj && p < key.length; obj = obj[key[p++]]);

return p < key.length || obj === undef ? def : obj;

}which does bail out early for deeply nested objects. It depends on if you want the trimmest version possible or the most efficient. If you're going for efficiency then I can change over to this one. Stats for that: It's a difference of 17 bytes, so up to you. |

|

Bailing out early is what dlv has been doing before my PR - #30 - but I changed it to reduce size and I still believe that early fallout is not common nor does it give any substantial win to justify a more complicated code. |

|

I agree! But this is an episode of "what has been seen cannot be unseen" :) |

|

@jonasraoni FWIW this one

was pretty cool, but results in an actually larger size after compression (as shown by |

|

Just a note. forin loops are a lot less performative then normal for loops. I don't think this should aim for fewer bytes in the module rather than performance. IMHO Even if we double the byte size, if we increase the performance I think it's still valid. |

That's precisely the goal of this helper library AFAIK. If someone cares about performance, they shouldn't use string-based dynamic paths in the first place, but when they do, microimprovements of loops are not going to change much. |

|

If performance is not a goal, then array reduce gives a smaller implementation, as pointed out in a few existing PRs: #8 I got: with: |

|

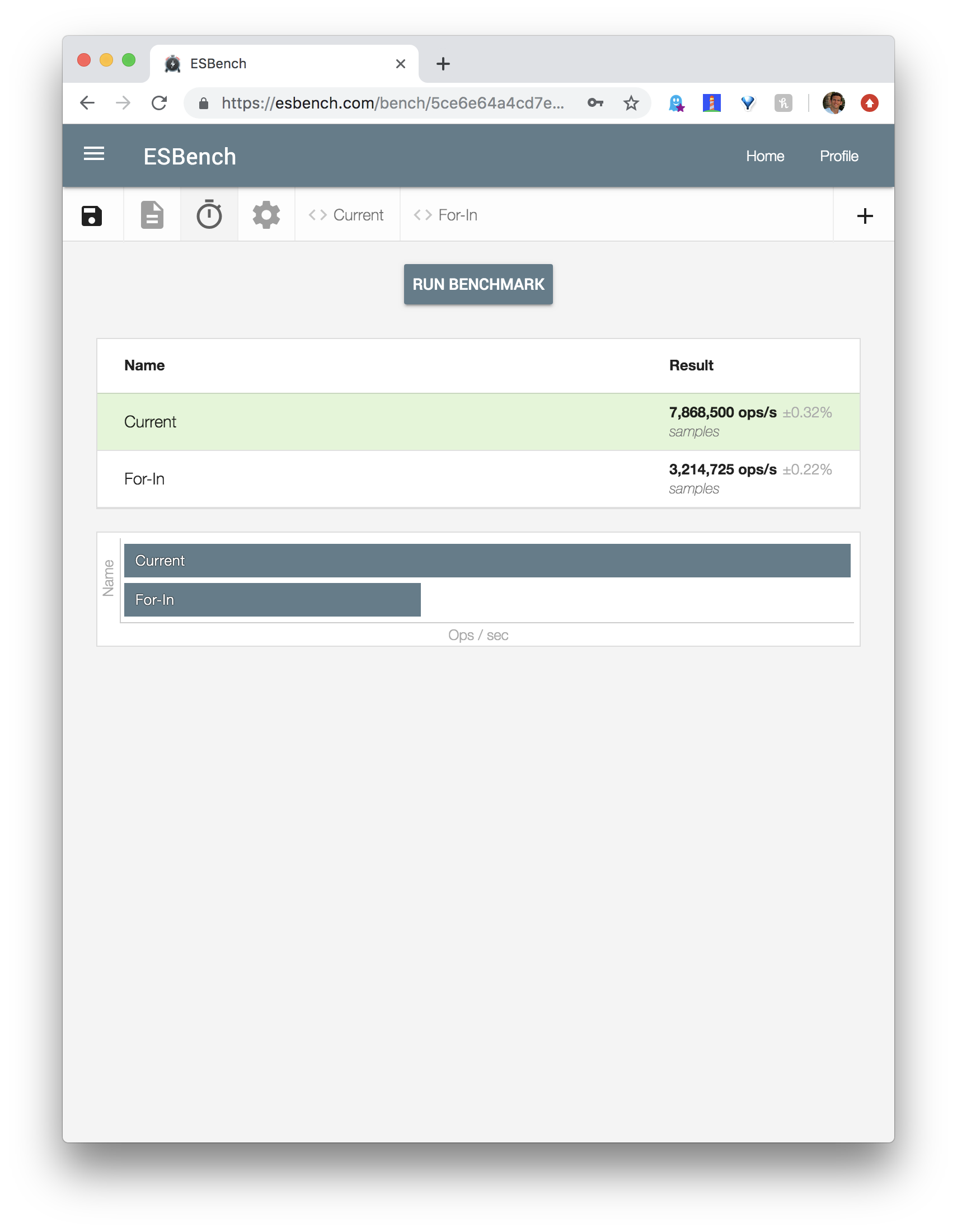

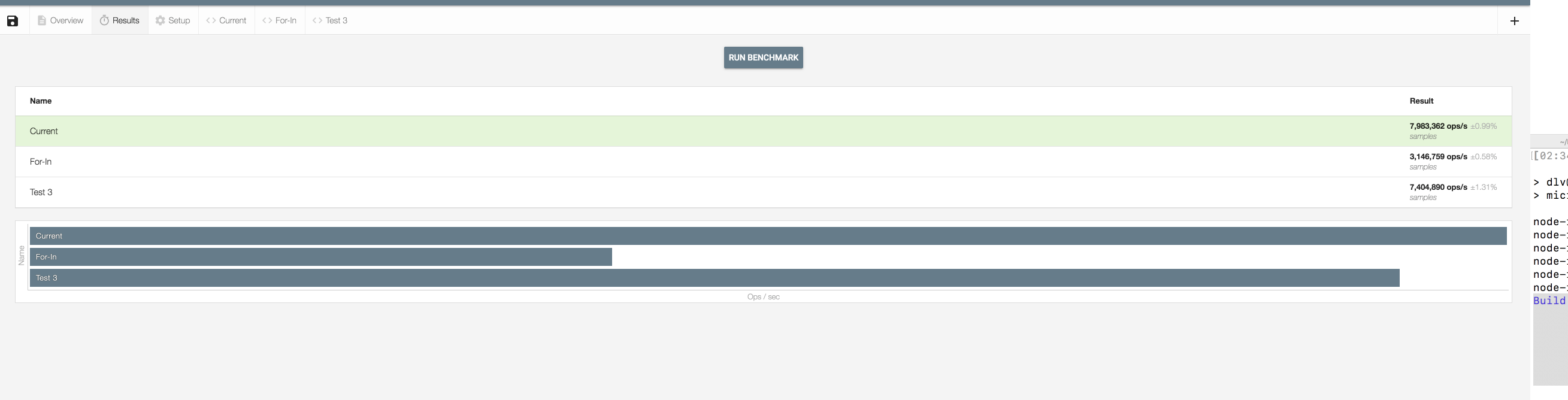

Jason's libraries are typically a balance of code size and performance. That's why they're so great. I don't want to speak for him, but losing 13 bytes for less than half the performance is generally not worth it. |

|

Here's the reduce version added to that bench as "Test 3": |

|

Seems like reduce gives the size savings while only being 6% slower than for-in |

|

Sorry, actually smaller!!! for: |

|

I opened a PR for the reduce version: #36 |

for/in returns the index for arrays, so this just works. Passes all the tests.

Before:

After: